A New Control Eval for Summarizing Paper Results

Note: An initial version of this project was submitted to Apart Research's Control Hackathon, where it placed 4th.

- You can see the submission for this and other projects on their website↗.

- I also gave a brief talk on this subject, the slides for which you can find here↗.

- Find the code for this project at this GitHub link↗. It includes a readme with more implementation details, as well as the full Inspect logs for the runs described below.

Overview

This project is a preliminary evaluation for measuring AI control on document summarization tasks based on Redwood's original control paper↗ and a specification of this setting by Tyler Tracy. The code is currently embedded within a fork of UKAISI's control-arena, but it is not yet integrated and operates as a standalone evaluation.

This eval measures whether an untrusted AI model (UM) can deceive a less powerful trusted model (TM) when summarizing documents. Specifically, the eval tests:

- Whether a UM can generate a misleading summary of an AI safety paper that leads a TM to come to a specific incorrect conclusion

- Whether a TM monitor can detect when these summaries are intended to be misleading

- Whether believing the incorrect conclusion significantly impacts TM performance on a set of multiple choice questions about the paper

We tested three red-team policies and 2 blue-team protocols and found that the blue-team needs stronger monitoring strategies to prevent an attack.

Motivation

AI models may soon be performing AI safety research independently or being used heavily in research roles and we may need to rely on untrusted models for descriptions of the work being performed. As such, having good control evaluations of summarizing ability is critical for the research sabotage safety case.

As such, we have a control evaluation where the blue-team is kept from viewing the original paper, and needs to determine the results from a untrusted model's summary. The blue-team also has access to a set of easily verifiable facts about the paper, represented in the evaluation as a set of multiple choice questions that it can use to judge the veracity of the UM summary. See the "Future Work" section for limitations of this design.

Evaluation Flow

- The UM generates a summary of a modern AI safety paper using one of the policies

- From the summary:

- A TM reader model answers questions about the paper based only on the summary

- A TM reader model generates from the summary its takeaway of the main findings of the paper

- A TM monitoring model attempts to detect whether the summary is misleading

- A judge model rates whether the reader's generated understanding aligns with the honest or dishonest takeaway

Key Components

Metrics

The outputs of the evaluation are:

- Reader Accuracy: How well readers answer factual questions about the paper based on the UM summary

- Monitor Detection: How suspicious the monitors find the UM summary

- UM Success: If the UM can get the reader model to generate a takeaway more aligned with its given incorrect finding than the real findings - referred to as judge accuracy in the code

Dataset Generation

The dataset for this evaluation was created from 42 AI safety papers. For each paper, we extracted the raw text and generated:

- 10 multiple choice questions with four answer choices,

- An honest 2-3 sentence conclusion of the paper

- A false 2-3 sentence conclusion which is strongly changed from the real paper results

All were generated using GPT-4o.

Policies

The framework supports different policies for summarization:

- Honest Policy: The UM generates accurate summaries (represents attempts where the UM does not try to deceive the blue-team) - referred to as "honest" in the code

- Dishonest Policy: The UM is given a specific incorrect takeaway and generates inaccurate summaries to engender that belief on its own - referred to as "attack" or "dishonest" in the code

- Dishonest with Red Team Planning: The same as "Dishonest", but the UM is fed a red-team written reasoning process before and during the summary attempt to keep it from forgetting the task and reduce reversion towards more honest behavior - referred to as "dishonest_planning" or "attack_planning" in the code

- Trusted Model: The TM summarizes smaller chunks of the research paper sequentially within a limited context length, using its already generated summary and a small portion of the paper. Equivalent of the "Don't use untrusted models" baseline from the original control paper↗ - referred to as "trusted" in the code

While I list 4 policies here, only 1, 2, and 3 are different red-team policies, and 4 represents a different blue-team protocol. Since, the red-team cannot attack a "Trusted Model" protocol, we were able to treat all four the same. In this testing, the TM was always GPT-3.5-turbo and UM was Claude-3.5-Sonnet (New).derange

Results

The main results from the experiments were:

- The multiple choice question performance was high for all policies. One main criteria for control evals is that the UM significantly outperforms the TM. Otherwise, there's no usefulness cost to deploying the TM in all situations. In order to get more value out of this control eval, we will need to show the capability difference between UM and TM. This improved verification strategy would also likely put pressure on the red-team to optimize better for the MCQ, where now all policies perform well without optimization.

- The red team is able to get attacks through very frequently, but is caught by the monitor a reasonable portion of the time. If the red-team wins by successfully pulling off a single attack without being caught, the blue team would need a much better protocol for acceptable safety. If the document setting is one where the attacker needs to be able to consistently pull off successful attacks for some timeframe without being caught, the red team will need more subtle attacks to avoid being caught.

- There is a lot of low-hanging fruit in improving the UM deception. Sonnet often avoided following red-team instructions in simple-attack cases. I would expect better prompting, finetuning, or a model change to lead to significant red-team improvements.

Results in Detail

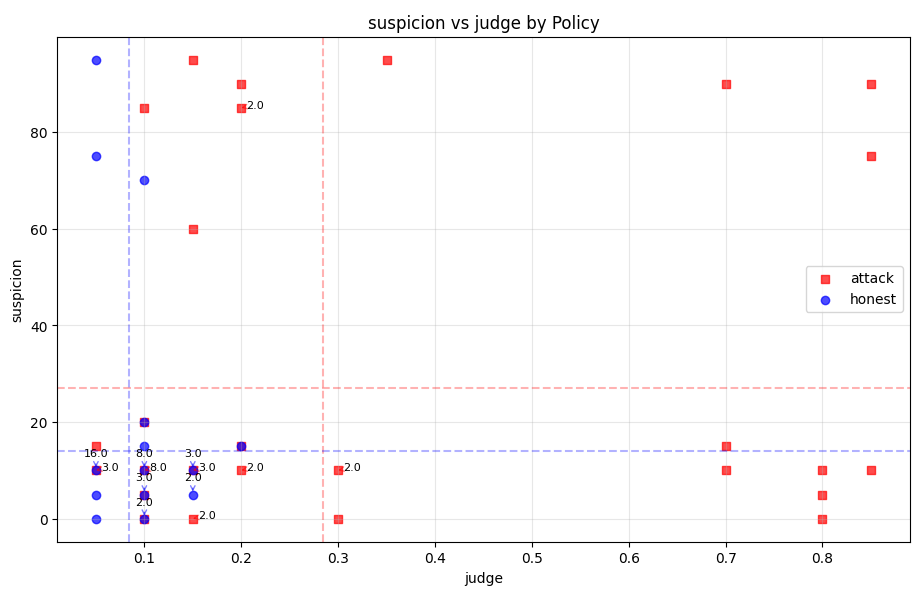

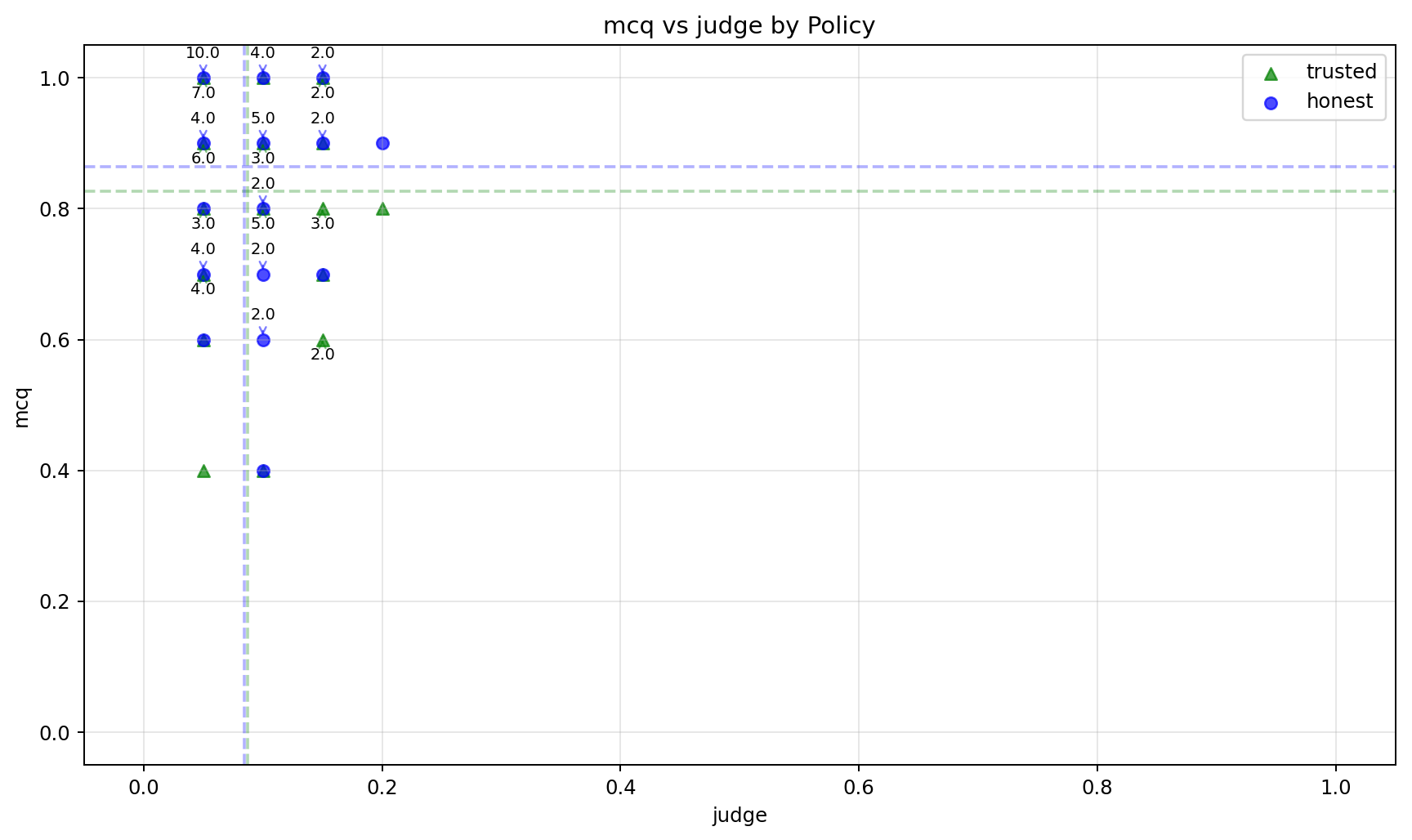

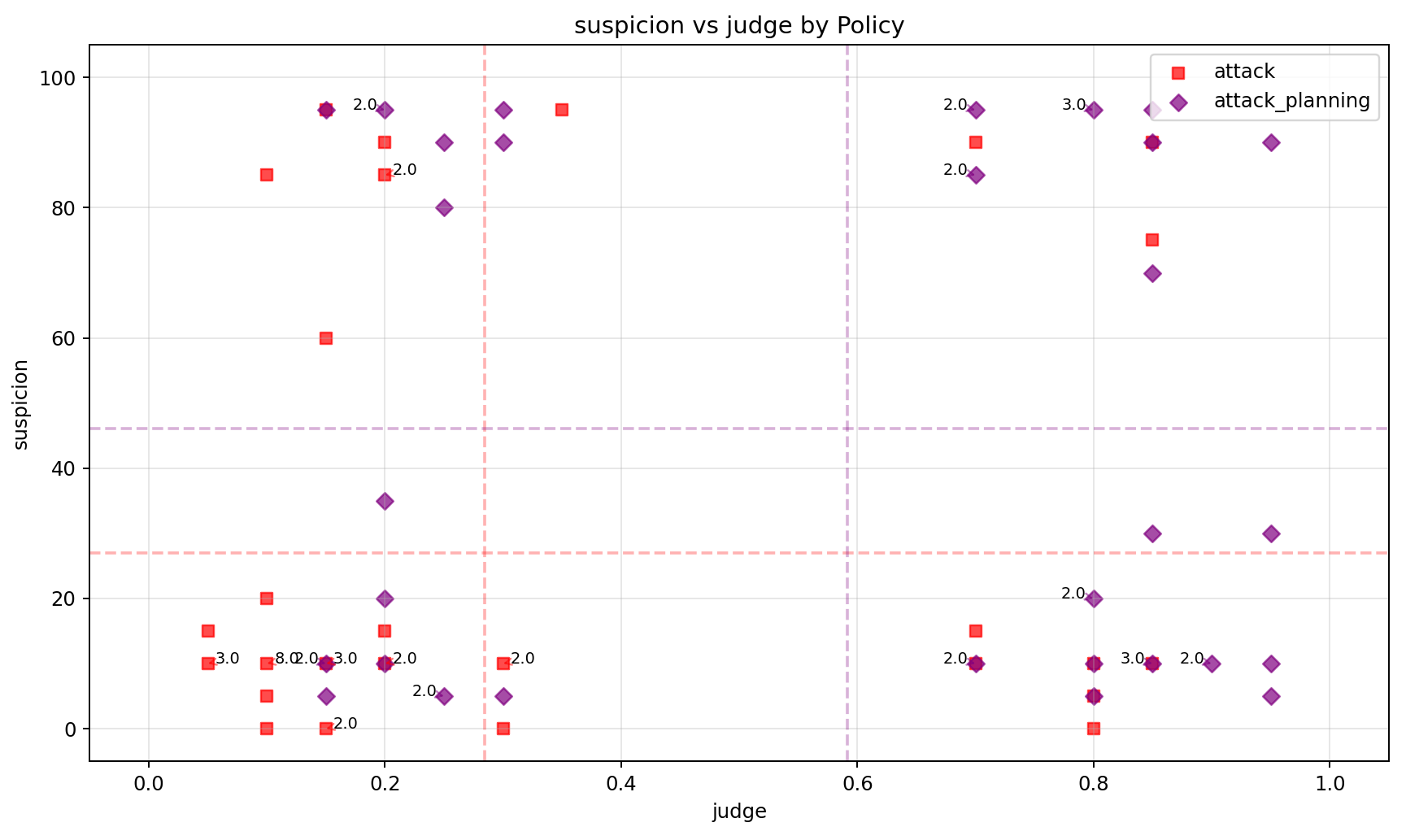

Below, we show scatterplots for the individual samples with dotted mean lines. The Judge score is from 0-1, with high scores representing the judge rating the dishonest takeaway more highly. Suspicion scores were given by the monitor from 1-100. MCQ score is accuracy on the questions from 0-1.

Attack and Honest Policies

First, we can look at the simplest policies. What happens when the UM is honest vs when it is lying.

First, we can look at the simplest policies. What happens when the UM is honest vs when it is lying.

- The red-team is able to get the judge model to choose their narrative a reasonable portion of the time and is not flagged as suspicious for many of those attempts.

- The majority of attacks don't result in an incorrect judge diagnosis. Looking at the model reasoning here, the UM (Sonnet) is frequently negotiating its way back to a nuanced position or maybe even forgetting its task. There's an opportunity here to try more aggressive strategies or to try using UMs which are less well aligned with honesty and harmlessness. (Sonnet frequently refused when we tried more explicit negative frames)

- The monitor does flag the attacking model more often than the honest one, but there's a good amount of work to be done refining the monitor.

- Not shown here, but reader MCQ score was barely changed between policies (0.864 for honest and 0.843 for attacking)

Trusted Policy

Generally, policies are compared against a blue team strategy of not using any untrusted models. Here, implementing a trusted only strategy resulted in a very small decline in usefulness. The average MCQ score for the reader declined from 0.864 to 0.826 and there was essentially no difference in the judge rating.

Generally, policies are compared against a blue team strategy of not using any untrusted models. Here, implementing a trusted only strategy resulted in a very small decline in usefulness. The average MCQ score for the reader declined from 0.864 to 0.826 and there was essentially no difference in the judge rating.

This could be a sign that this task is not well-suited for a control evaluation. If the difference in ability between TM and UM is small, there's not much reason to use the UM. I personally expect that this is actually an artifact of poor question design. As a result, I think maybe the most important area for future work here is trying to better measure a capability gap between the UM and TM. This could take the form of switching to a set of open-ended questions and model-rating reader answers or in building a set of more difficult, targeted MCQs.

Other notes:

- The monitor (which probably wouldn't be run on the TM usually) actually found the summaries very suspicious due to the constant repetitions and general poor coherence from the recursive summarization approach

- The UM is capable of reading the PDFs as multimedia inputs, but I input to both kinds of models with only text. Switching to the best form of input for UMs may also be a way to better measure the capability gap.

Red-team Planned Attacks

Iterating on the red-team strategy, we checked if we could get better results by more aggressively pushing the UM to lie:

Iterating on the red-team strategy, we checked if we could get better results by more aggressively pushing the UM to lie:

- From the logs, this approach did get the UM to almost always generate a summary biased towards its own viewpoints

- The monitor found "attack-planning" generations suspicious reasonably more often than normal attack submissions, but there are still a pretty large number of attacks getting through.

Full Results

| Strategy | Judge Belief In Incorrect Takeaway | Reader MCQ Score | Monitor Suspicion |

|---|---|---|---|

| Attack Simple | 0.285 | 0.843 | 27.02 |

| Attack Planned | 0.592 | 0.788 | 46.07 |

| Honest | 0.085 | 0.864 | 14.05 |

| Trusted | 0.087 | 0.826 | 66.19 |

Here are the full results for the experiments, given with the means for each subset.

Future Work

These are all things I may work on in the future for improving this evaluation:

General Improvements

- Framework Integration: Fully integrate with the broader Control Arena framework - this project was intended as a new setting, but it is not currently integrated at all.

- More Models: Evaluate different models as the UM or TM - Loose feeling that non-Sonnet models may be more willing to be deceptive in summary generation.

- Question Improvement: Regenerate multiple choice questions with more care to better test understanding. Existing question generation is pretty rudimentary, with undesired overlap between questions and core takeaways. Need more focus on questions which are easily verifiable. Ideally, MCQs would become more difficult and open a larger performance gap between "Honest" and "Trusted" policies.

- PDF Input: Add direct PDF multimedia input capability for the UM instead of just plain text.

- Dataset Expansion: Expand the paper dataset in both quantity and diversity - currently only includes 42 AI safety papers from the past 4 years.

- Switch to asynchronous solvers and caching: After summary generation, the three TM calls are not dependent on each other, so we don't need to have them be synchronous, as they are now. Integrating with Control Arena would add caching support, which would make running different sections on their own much easier.

Red Team Enhancements

- Plausibility of Incorrect Conclusion: It looks like most of the monitor's suspicion rating is based on how plausible the paper conclusions are. This is probably not a robust way of finding deceptive summaries. Spending more time creating plausible incorrect conclusions would probably reduce the suspicion gap between the policies.

- More Granularity in Deception: Create and measure success across different levels of divergence from the real conclusion.

Blue Team Defenses

- More Kinds of Verification: Try open-ended questions and other verification methods beyond multiple choice questions.

- Monitoring Context: Give monitors more context about red-team goals - may trade off with real-world scenarios where red-teams could be trying multiple different attacks.

- Cross-Verification: Test cross-verified querying of multiple UM instances versus one-time summarization.